70% Faster Deployments and High-Performance Private Network

It’s Day 2 of our Launch Week, and we’re excited to unveil our new networking stack!

If you're following us, you know that we're obsessed with performance: we want fast deployments and a speedy network once your apps are live.

Long story short: while optimizing deployment speed, we hit limitations with our old stack, so we decided to revamp everything and build a new networking stack for the platform.

When you deploy on the platform, you get advanced capabilities out of the box, including automatic load-balancing, fully encrypted private networking, built-in observability, auto-healing, and automatic service discovery, to name a few. All these features are tied to the networking stack.

To build this new network layer, we've replaced our previous setup, a forked Kuma Mesh, with a custom-built stack on top of Envoy and Cilium. We had to completely rewrite our GLB (Global Load Balancer) component, move to a custom service mesh built on CoreDNS and backed by Consul DNS, and swap out sidecars for a powerful combination of Cilium and eBPF.

This brings three major improvements:

- 70% faster deployments: A new service now takes between a couple of seconds and 90 seconds to go live. Previously, it could take up to 5 minutes. (Don't quote us on this if you have a 200GB model in your image (: )

- High-Performance Private Network: The built-in private network, or VPC, now provides up to 10 Gb/s of bandwidth.

- Improved deployment reliability: Network configuration propagation errors are gone!
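For the curious, the 70% figure lines up with the upper bounds quoted above. Here is a quick sketch of that arithmetic, assuming the comparison is between the previous worst case (5 minutes) and the new one (90 seconds); the function and variable names are illustrative only:

```python
def percent_reduction(before_s: float, after_s: float) -> float:
    """Return how much shorter `after_s` is than `before_s`, in percent."""
    if before_s <= 0:
        raise ValueError("before_s must be positive")
    return (before_s - after_s) * 100 / before_s


# Assumption: we compare the two upper bounds mentioned in the list above.
old_worst_case_s = 5 * 60  # "up to 5 minutes" on the previous stack
new_worst_case_s = 90      # "90 seconds" on the new stack

reduction = percent_reduction(old_worst_case_s, new_worst_case_s)
print(f"{reduction:.0f}% less time to go live")  # prints "70% less time to go live"
```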

This is now live in all regions and all new deployments happen on this new stack!

Faster deployments aren't even the half of it! We have more exciting news to share during our second Launch Week!

From Envoy sidecars with Kuma to eBPF processing with Cilium and... Envoy

When we started building Koyeb, we had to pick the right tools and technology to build and scale our platform in its early days.

For the network stack, we've always aimed to provide a built-in service mesh with the following key features:

- Multi-tenancy: network isolation for each user

- Service discovery: a service is addressable via an internal domain name

- Observability: network calls between services can be traced and metrics can be collected

- Built-in load-balancing: to easily scale and autoscale services horizontally

- Resiliency: automatic detection of instances not responding to health checks

- Zero Trust Network: mTLS between all Instances

We picked Kuma, an open-source service mesh built on top of Envoy, to build our multi-region service mesh. Kuma helped us go from nothing to scaling tens of thousands of applications running on the platform.

Then, we started to hit limitations as we scaled:

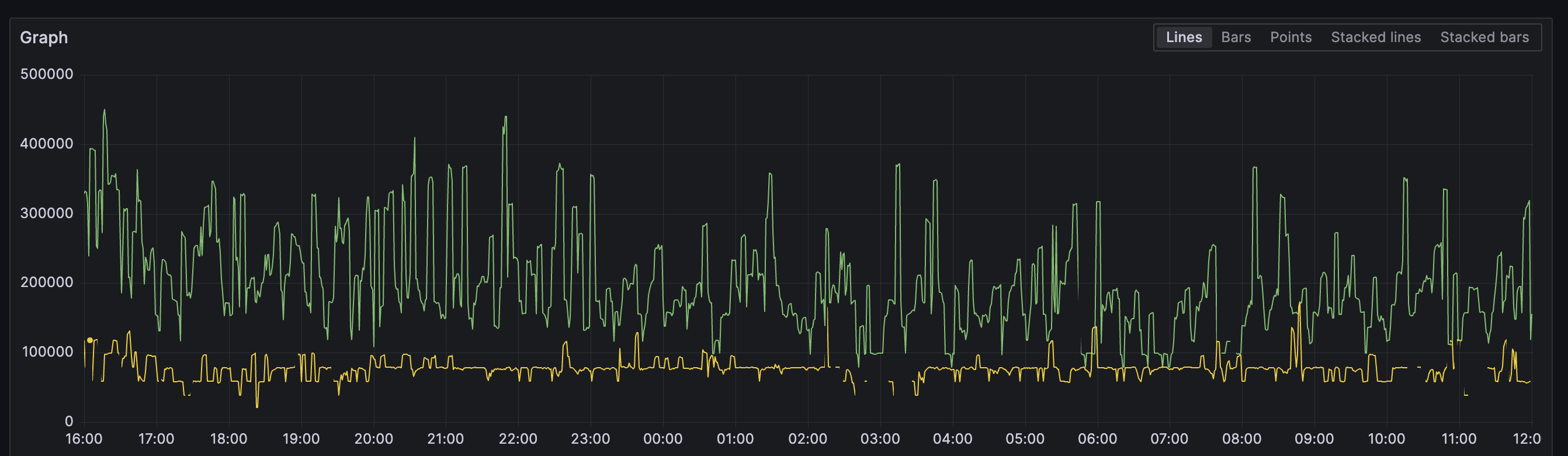

- Deployment speed: Networking affects deployment speed through network convergence time. We want faster deployment times so you can deploy a critical patch in seconds and iterate quickly. Our machinery used to spend a lot of time computing network configurations and delivering them to a large number of proxies all over the world. In the graph, the green line shows the propagation time before (with Kuma) and the yellow line shows our new network stack.

- Bandwidth and latency: Both are critical for network-intensive apps like distributed databases and real-time applications. Kuma, like most service meshes, uses a sidecar model, which adds latency to every request flowing in and out of Koyeb Instances. With data volumes coming to the platform, you'll be able to deploy distributed databases, where high-throughput networking matters.

To ensure we can continue delivering the best possible experience, we decided to rethink our approach.

Long story short, we decided to drop Kuma and its Envoy sidecars for networking and opt for a custom solution that is conceptually simpler and incredibly fast. By adopting Cilium and leveraging eBPF + WireGuard, network processing is now done directly in the Linux kernel with full encryption, eliminating the overhead associated with sidecar proxies. Load-balancing is still performed by Envoy proxies that are directly controlled by our control plane.

Curious to hear more? Stay tuned for our dedicated engineering blog post!

Faster deployments, reduced latency, and more bandwidth - our custom stack brings it all!

Changes and How to Use the New Networking Stack

What do you need to use the new stack? The answer is simple: nothing! You don't need to take any action—your applications will benefit from the performance improvements automatically.

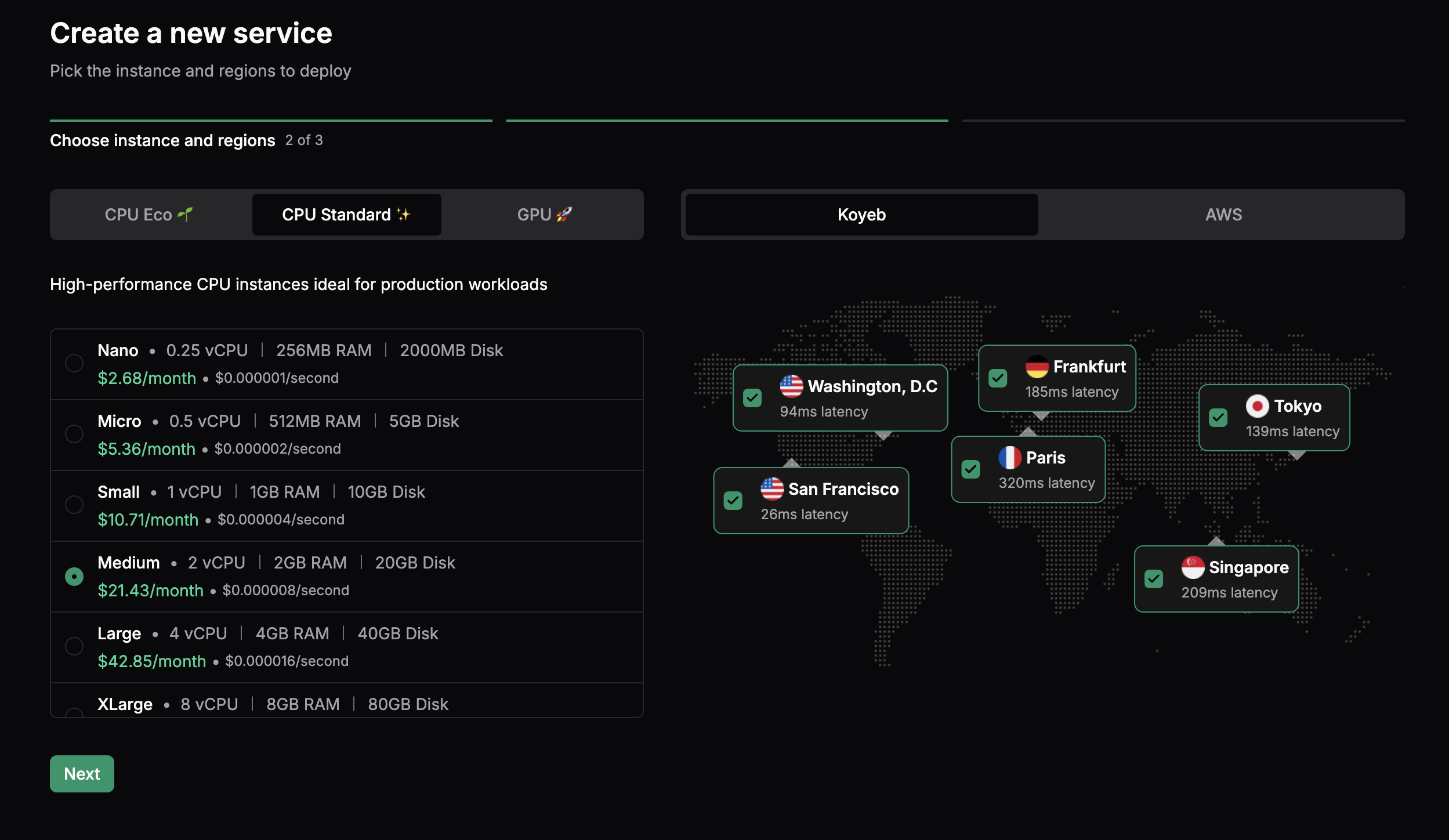

San Francisco, Washington D.C., Paris, Frankfurt, Singapore, and Tokyo - Our new networking stack is available in all Koyeb regions!

Technically, you'll see that instances can now be accessed directly inside the private network, as there is no sidecar anymore, which makes it easier to build clusters.
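To give a feel for what this enables, a cluster member could discover its peers with a plain DNS lookup and then talk to them directly. The sketch below is only an illustration under assumptions: `resolve_peers` is a hypothetical helper, `localhost` stands in for an internal service name, and whether a lookup returns one address per Instance depends on the platform's actual service discovery behavior, so check the documentation for the real naming scheme.

```python
import socket
from typing import Callable, List


def resolve_peers(hostname: str, port: int,
                  getaddrinfo: Callable = socket.getaddrinfo) -> List[str]:
    """Return the sorted, de-duplicated IP addresses behind `hostname`."""
    infos = getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # "localhost" is a stand-in for a hypothetical internal service name.
    try:
        for ip in resolve_peers("localhost", 8080):
            print("peer:", ip)
    except socket.gaierror as exc:
        print("lookup failed:", exc)
```

The resolver is passed in as a parameter so the lookup can be swapped out in tests or replaced with another discovery mechanism.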

What’s Next

This is just the beginning. Our new networking stack lays the foundation for future improvements and innovations that will allow us to continue pushing the boundaries of what’s possible for cloud-native applications.

Stay tuned as we roll out even more exciting updates during Launch Week!
