Tenstorrent Cloud Instances: Unveiling Next-Gen AI Accelerators

Today, we’re thrilled to announce the world-premiere availability of Tenstorrent Instances via the Koyeb Serverless Platform. You can now access the Wormhole multi-chip solution in minutes to bring up models and test the frontiers of inference performance.

You've probably heard us say this: we're committed to bringing alternative accelerators to market to foster innovation in the AI infrastructure space. We're super excited to officially introduce Tenstorrent’s unique scale-out architecture and open-source software stack on the platform.

This strategic partnership combines Koyeb’s hardware-agnostic cloud infrastructure and Tenstorrent’s next-generation AI hardware to enable on-demand access to Tenstorrent's Tensix Processor and to the open-source TT-Metalium SDK.

Concretely, we're introducing two new instance types:

- TT-N300S: With one n300s, this instance has 24GB of GDDR6, 192MB of SRAM, provides up to 466 FP8 TFLOPS, and comes with 64GB of RAM with 4 vCPU.

- TT-Loudbox: With 4x n300s meshed together, this instance has 96GB of GDDR6, 768MB of SRAM, provides up to 1864 FP8 TFLOPS, and comes with 256GB of RAM with 16 vCPU.

These new instances come with all the native features of the Koyeb platform to bring developers the fastest way to build AI models and run inference workloads in on-demand environments, with zero infrastructure management. Developers can take advantage of all the capabilities of the platform, including:

- Instant Development Environments with the ability to deploy containers running the Tenstorrent open-source SDKs in a minute

- Fast NVMe volumes to persist data in development environments

- Continuous deployment with automatic build of your Dockerfiles

- Scale-to-zero and autoscaling for production serverless inference endpoints

- Built-in observability to analyze logs and metrics

Accelerating access to Tenstorrent's hardware for developers is essential to Tenstorrent's open-source approach:

On demand access enables developers to experience Tensix processors in a minute - allowing them to develop, test and run their models faster. Cloud access is important to us because we want developers to be able to try our technology and see that we are serious about their experience and their feedback before committing to ownership. This is one of the ways we enable them to own their future.

Jim Keller, CEO of Tenstorrent

Keep reading to learn more and head to our get started section to see what you can build today!

It's available right now in private preview. Deploy now.

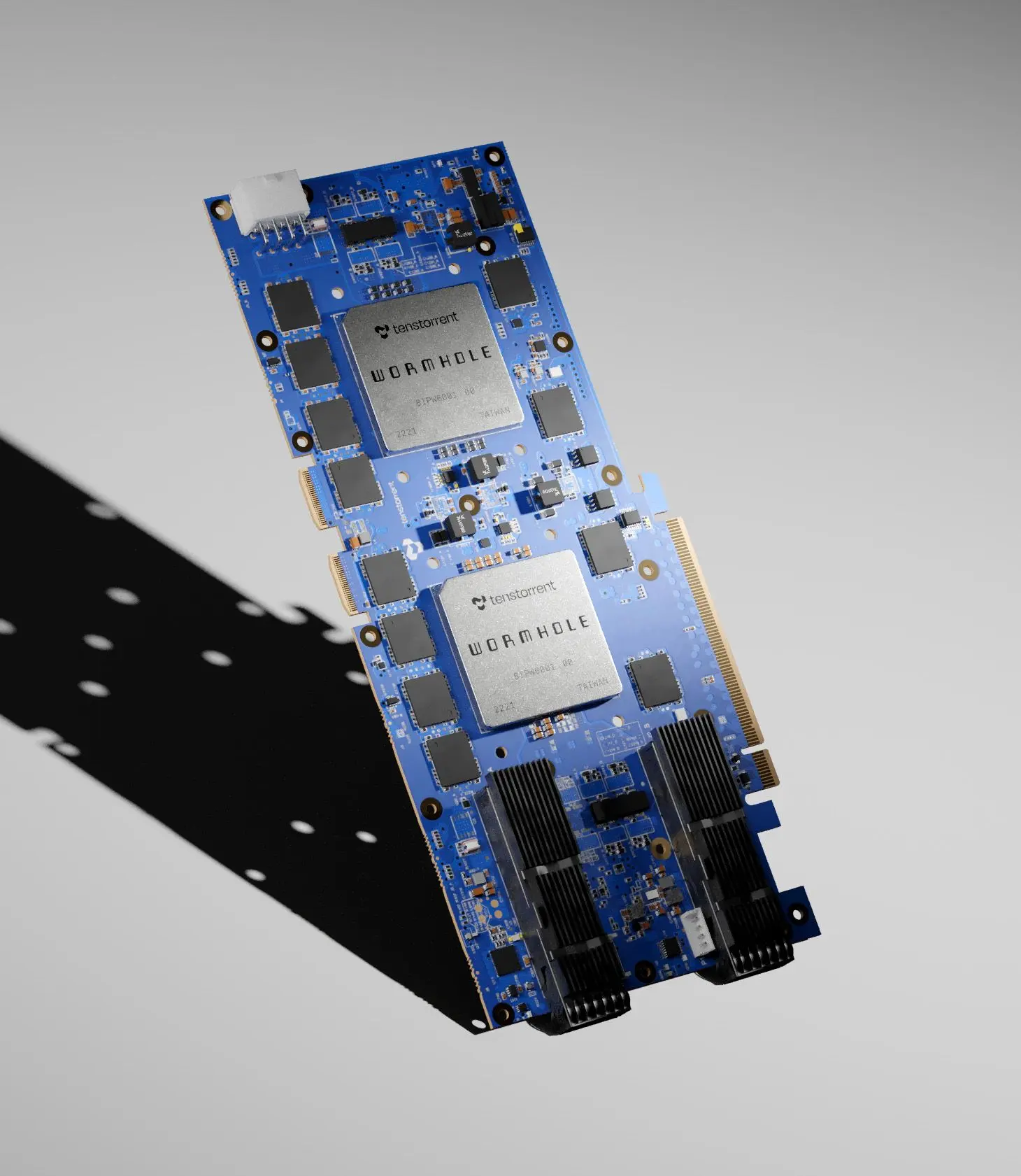

Tenstorrent Wormhole and Instances Specifications

All instances released today are equipped with Tenstorrent Wormhole n300s cards. Wormhole is Tenstorrent’s first-generation Tensix Processor, built with Tensix Cores, each of which includes a compute unit, Network-on-Chip, local cache, and “baby RISC-V” cores, resulting in uniquely efficient data movement through the chip.

Development on these cards is supported by Tenstorrent’s open-source TT-NN and TT-Metalium SDKs.

The Wormhole ASIC is designed to scale, featuring a Network-on-Chip (NoC) that offers 3.2 Tbps of Ethernet connectivity to surrounding chips, and we're releasing an instance equipped with four meshed n300s leveraging this capability. If you're familiar with the Tenstorrent ecosystem, this is equivalent to a TT-LoudBox system in terms of accelerator computing.
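As a quick sanity check on the four-card configuration, the sketch below multiplies the single-card TT-N300S figures quoted in this post by four and compares the result with the TT-Loudbox figures. The dictionary keys and the `scale` helper are names made up for this illustration, and the numbers are the advertised peak values from this post, not measurements.

```python
# Peak figures as quoted in this post (advertised values, not benchmarks).
N300S = {
    "gddr6_gb": 24,
    "sram_mb": 192,
    "fp8_tflops": 466,
    "fp16_tflops": 131,
    "blockfp8_tflops": 262,
}
LOUDBOX = {
    "gddr6_gb": 96,
    "sram_mb": 768,
    "fp8_tflops": 1864,
    "fp16_tflops": 524,
    "blockfp8_tflops": 1048,
}
CARDS_PER_LOUDBOX = 4  # "four meshed n300s"


def scale(spec: dict, cards: int) -> dict:
    """Multiply every per-card figure by the number of cards (ideal linear scaling)."""
    return {name: value * cards for name, value in spec.items()}


if __name__ == "__main__":
    derived = scale(N300S, CARDS_PER_LOUDBOX)
    for name, value in derived.items():
        status = "matches" if value == LOUDBOX[name] else "differs from"
        print(f"{name}: 4 x {N300S[name]} = {value} ({status} the quoted TT-Loudbox figure)")
```

These are aggregate peak numbers. How much of that aggregate a given model can use depends on how it is partitioned across the meshed cards.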

Here are the full specifications of the two instances we're introducing:

| | TT-N300S | TT-Loudbox |
|---|---|---|
| Memory (GDDR6) | 24GB | 96GB |
| SRAM | 192MB | 768MB |
| FP8 | 466 TFLOPS | 1864 TFLOPS |
| FP16 | 131 TFLOPS | 524 TFLOPS |
| BLOCKFP8 | 262 TFLOPS | 1048 TFLOPS |
| vCPU (AMD Genoa) | 4 | 16 |
| RAM (DDR5) | 64GB | 256GB |

Wormhole supports a large set of data precision formats: FP8, FP16, BFLOAT16, FP32 (Output Only), BLOCKFP2, BLOCKFP4, BLOCKFP8, INT8, INT32 (Output Only), UINT8, TF32, VTF19, and VFP32.
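To get a rough feel for how precision choice interacts with the GDDR6 capacity of each instance, here is a back-of-the-envelope sketch that estimates the size of a model's weights alone. It rests on several assumptions that are not from this post: 1 byte per FP8 value and 2 bytes per FP16 or BFLOAT16 value, decimal gigabytes, hypothetical parameter counts, and the instance's GDDR6 treated as a single pool. It ignores activations, KV cache, and runtime overhead, so treat the result as an optimistic upper bound on what fits.

```python
# Assumed storage cost per weight, in bytes (standard widths for these formats).
BYTES_PER_PARAM = {"FP8": 1, "FP16": 2, "BFLOAT16": 2}

# GDDR6 capacity per instance type, as quoted in this post.
GDDR6_GB = {"TT-N300S": 24, "TT-Loudbox": 96}


def weight_footprint_gb(num_params: int, fmt: str) -> float:
    """Size of the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def weights_fit(num_params: int, fmt: str, instance: str) -> bool:
    """True if the weights alone are no larger than the instance's total GDDR6."""
    return weight_footprint_gb(num_params, fmt) <= GDDR6_GB[instance]


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params in (8_000_000_000, 70_000_000_000):
        for fmt in BYTES_PER_PARAM:
            size = weight_footprint_gb(params, fmt)
            fits = [name for name in GDDR6_GB if weights_fit(params, fmt, name)]
            print(f"{params / 1e9:.0f}B params in {fmt}: {size:.0f} GB, fits on: {fits or 'neither'}")
```

Because the memory is spread across several chips, a model that passes this check still has to be sharded across them in practice.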

Get Started with On-Demand Tenstorrent Access

To get started and deploy your first service using Tenstorrent instances, we’ve packaged two one-click applications to provide a ready-to-use environment.

Development Environment with VSCode Integration

Using this one-click application, you will be able to deploy a Wormhole instance on Koyeb and connect to it from your VSCode editor.

Before getting started, ensure you have the Remote - Tunnels extension installed in your VSCode editor.

Once the service is created and deployed, follow the instructions displayed in the Koyeb service logs to register the instance and connect to it from your VSCode editor.

That's it for the configuration! Next, to connect from your local VSCode editor:

- Press `cmd + shift + p` (Mac) or `ctrl + shift + p` (Windows/Linux) to open the command palette

- Run the command `Connect To Tunnel...`, and select GitHub as the account type

- Your Koyeb instance should appear in the list of available devices with the default name `tt-instance`. Select it to instantiate a VSCode instance running on the remote Koyeb service.

Once connected, you'll have an environment with everything needed to get started. A great way to familiarize yourself with Tenstorrent and run your first models is by using TT-Studio, which provides a simple and friendly way to rapidly run inference on Tenstorrent hardware.

Development Environment using Tailscale for SSH access

Alternatively, if you need direct SSH access to the Tenstorrent instance, you can use the following one-click app, which provides SSH access to the instance through Tailscale.

To use this method, you will need a Tailscale account and to create an auth key to authenticate the instance to your tailnet.

Before deploying, make sure to set the value of the TAILSCALE_AUTH_KEY environment variable to your own auth key.
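A forgotten or placeholder auth key is an easy mistake to make, so a small check before deploying can save a failed rollout. The sketch below is one hypothetical way to do that. The `check_auth_key` helper is not part of Koyeb or Tailscale, and the `tskey-` prefix test is an assumption based on the commonly seen format of Tailscale keys, so adjust or remove it if your keys look different.

```python
import os
from typing import Mapping

# Assumption: Tailscale keys commonly start with this prefix.
ASSUMED_KEY_PREFIX = "tskey-"


def check_auth_key(env: Mapping[str, str]) -> str:
    """Return the trimmed auth key, or raise ValueError if it is missing or looks wrong."""
    key = env.get("TAILSCALE_AUTH_KEY", "").strip()
    if not key:
        raise ValueError("TAILSCALE_AUTH_KEY is not set or is empty")
    if not key.startswith(ASSUMED_KEY_PREFIX):
        raise ValueError(
            f"TAILSCALE_AUTH_KEY does not start with {ASSUMED_KEY_PREFIX!r}; "
            "it may still be a placeholder"
        )
    return key


if __name__ == "__main__":
    try:
        check_auth_key(os.environ)
        print("TAILSCALE_AUTH_KEY looks plausible; ready to deploy.")
    except ValueError as err:
        print(f"Not ready to deploy: {err}")
```

The check only looks at the shape of the value. Whether the key is valid and unexpired is decided by Tailscale when the instance tries to join your tailnet.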

Once the service is created and deployed, the instance will appear in your Tailscale machines list and you will be able to connect to it using SSH.

In addition to these two one-click applications, you can deploy any pre-built container or connect your GitHub repository directly and let Koyeb handle the build of your applications.

Start Building with Tenstorrent Today

We’re thrilled to bring Tenstorrent’s next-generation AI hardware to Koyeb, offering developers the fastest way to build AI models and run inference workloads in on-demand environments.

Sign up for the platform today and get started deploying on Tenstorrent instances.

We can't wait to see what you’ll build and deploy with Tenstorrent instances on Koyeb!

Come celebrate AI infra innovations with us! 🎉

We're organizing special meetups with Tenstorrent to celebrate the launch of Tenstorrent Instances on Koyeb. Join us for live demos, deep dives into Tenstorrent's architecture, Q&A with our teams, and more.